Recommended for you, and how it began to disappear

For roughly the last 10 years, the Related Videos on YouTube have been made up of two large groups:

Content related

If you are watching someone painting, other painters are suggested to you.

Often this is related content from the same author.

Personalized suggestions

Based on your past activity: if you are watching a painter, you might also like other visual arts.

And so a few unrelated recommendations show up because you behave similarly to other anonymous strangers.

Despite the total asymmetry of decision-making power in YouTube's favor, at least these videos were explicitly marked with a label: Recommended for you. That was a small form of transparency in the attention-stealing machine that Google bought.

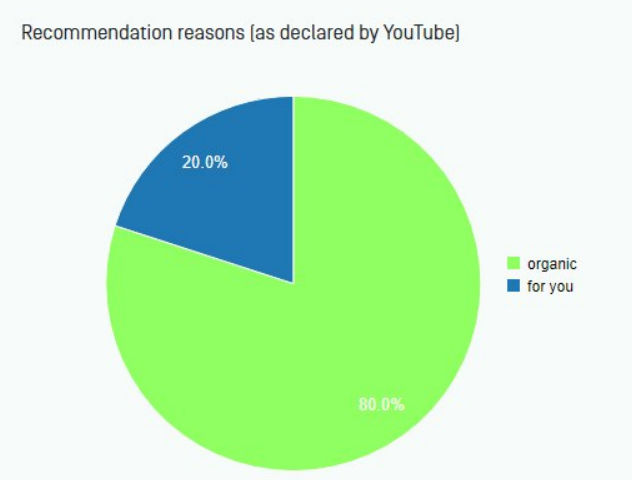

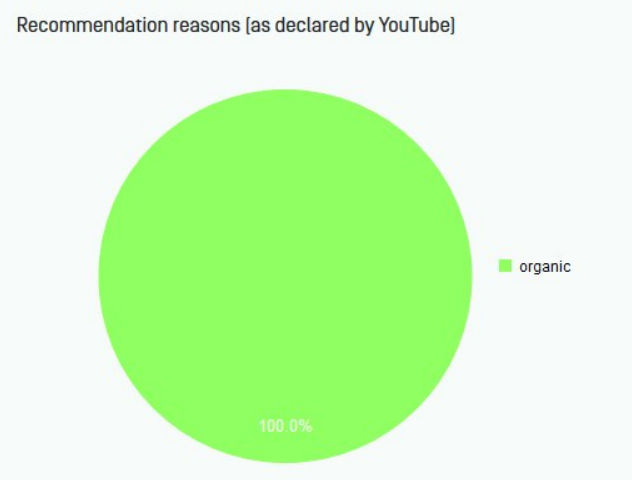

Since the beginning of October, although we didn't record any meaningful change in the recommender system, YouTube has stopped being explicit. The graph shows a decline in transparency, and this short page explains how we recorded it.

The percentage is computed by comparing the total number of related videos with the number explicitly labeled Recommended for you, as they were served to our (too small? then join us!) group of 185 participants.
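As a rough illustration of that computation, here is a minimal Python sketch. It is not the project's actual code: the `RelatedVideo` record, its field names, and the grouping by day are assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RelatedVideo:
    # Hypothetical record layout, not the project's real schema.
    day: str                   # day the related video was observed, e.g. "2020-09-15"
    recommended_for_you: bool  # True when the "Recommended for you" label was shown


def labeled_share_by_day(videos):
    """Return {day: percentage of related videos carrying the label}."""
    totals = defaultdict(int)
    labeled = defaultdict(int)
    for video in videos:
        totals[video.day] += 1
        if video.recommended_for_you:
            labeled[video.day] += 1
    # Every day in `totals` has at least one video, so the division is safe.
    return {day: 100.0 * labeled[day] / totals[day] for day in totals}
```

Fed with every related video collected from the participants, a function like this would yield one percentage per day, which is the kind of series the graph plots.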

A step back

Personalized recommendation is the root cause behind visually compelling metaphors such as the Rabbit Hole Effect, which YouTube denies.

How we know it

Some people install our browser extension, which scrapes a few portions of their YouTube pages on their behalf. We designed the technology to monitor and understand YouTube, NOT to profile the volunteers participating in data donations.

We document the data minimization, the code is free software, and we take care to explain the (open and replicable) research methodology! We believe anyone might want to audit the YouTube algorithm. After all, if Google experiments with you, why shouldn't you experiment with them?

Intended side effect

Then, with our analysis, we extract information on how YouTube personalizes recommendations, homepages, and search results. A step in this process is to separate what is content-related from what is profile-related.

Recommended for you was a small piece of information that showed, somehow, Google's interest in being explicit about what was algorithmically selected for you, compared to what is (algorithmically, again) selected because it is content-related. Well: not anymore, it's gone!
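To make the role of the label concrete, here is a small Python sketch of how related videos could be split into the two groups. The dictionary layout and the `recommendedForYou` key are hypothetical, chosen only for illustration, and do not describe the extension's real data format.

```python
def split_by_label(related):
    """Split related videos into (personalized, content_related).

    Each item is a dict; the "recommendedForYou" key is a hypothetical
    flag that is truthy when the label was shown next to the video.
    """
    personalized = [v for v in related if v.get("recommendedForYou")]
    content_related = [v for v in related if not v.get("recommendedForYou")]
    return personalized, content_related
```

Under these assumptions, once the label is no longer served, every video lands in the second list and the two groups can no longer be told apart from the page alone.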

This might not be a surprise if you were using the browser extension, because the stats display gradually changed from left to right:

September '20

October '20

Detailed report: stats on the kinds of related videos, from September 2020 to 11 October 2020

(The details also report the number of live videos present in the recommendations. This is not relevant to this blog post.)

References and ongoing discussion (this section will be updated)

About us: project Twitter, Facebook page, Mattermost chat.

Please consider that this project, despite being unique in its model of data collection, sharing, and potential reuse by you or anybody else, is currently unfunded and maintained with no small difficulty.

A thread from Reddit, September 2020: Is it me or has youtube recommended system gotten way less "explorative"?