WEtest YOUtube

* * *

A collaborative observation of the YouTube algorithm during the Covid pandemic.

(Academic publication)

(Call to action)

(Analysis notes)

Methodology

On March 25th, 2020, we openly asked participants to:

Watch five BBC videos about Covid-19 on YouTube.

In five different languages.

All together, compare the algorithm's suggestions.

And learn how to wash hands.

What we observe:

- Recommended videos: Where the personalization algorithm takes action

- Participants comparison: Personalization can only be understood by comparing different users

- Content moderation: What about disinformation? Is curation worse for non-English languages?

ANONYMIZATION PROCESS

01. Unique and secret token

Every participant is assigned a unique code to download their evidence

02. Your choice

With the token, participants can manage the data provided: visualize, download or delete

03. Not our customer

We are not obsessed with you ;) We don't collect any data about your location, friends or similar

04. WEstudy YOUtube

We collect evidence about the algorithm's suggestions, like recommended videos
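
As an illustration of step 01, a secret token can be drawn from a cryptographically secure random source. This is only a minimal sketch: the function name `new_participant_token` and the token length are assumptions made for the example, not a description of how the project actually generates its tokens.

```python
import secrets


def new_participant_token(n_bytes: int = 20) -> str:
    """Return a random hex token (hypothetical format: 2 hex characters per byte)."""
    # secrets uses the operating system's secure random generator,
    # so tokens cannot be guessed from previously issued ones.
    return secrets.token_hex(n_bytes)


token = new_participant_token()
print(len(token))  # 40 hex characters for 20 random bytes
```

Because the token is random and not derived from any personal detail, it can identify a contribution without identifying the person behind it.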

Research Protocol

We asked participants to open, for at least 10 seconds,

a sequence of 5 different BBC videos about Covid-19.

- Open the YouTube homepage.

- Open the Chinese video.

- Open the Spanish video.

- Open the English video.

- Open the Portuguese video.

- Open the Arabic video.

- Open the YouTube homepage again.
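
The steps above can be sketched as a simple check that a participant's session followed the protocol. The page labels, the `PageVisit` record and the function name are hypothetical and chosen only for this example; the order of the steps and the 10-second minimum come from the protocol as listed.

```python
from dataclasses import dataclass
from typing import List

# Order of the steps as listed in the protocol; the labels are illustrative.
PROTOCOL = ["home", "chinese", "spanish", "english", "portuguese", "arabic", "home"]
MIN_SECONDS = 10  # each video should stay open for at least 10 seconds


@dataclass
class PageVisit:
    page: str       # one of the labels in PROTOCOL
    seconds: float  # how long the page stayed open


def follows_protocol(visits: List[PageVisit]) -> bool:
    """True if the visits match the protocol order and every video was open long enough."""
    if [v.page for v in visits] != PROTOCOL:
        return False
    # The homepage steps have no minimum duration, only the five videos do.
    return all(v.seconds >= MIN_SECONDS for v in visits if v.page != "home")


session = [PageVisit(page, 12) for page in PROTOCOL]
print(follows_protocol(session))  # True
```

Only sessions that pass a check of this kind are directly comparable, since every participant has then seen the same videos in the same order.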

FINDINGS

* * *

A small summary of the most interesting results

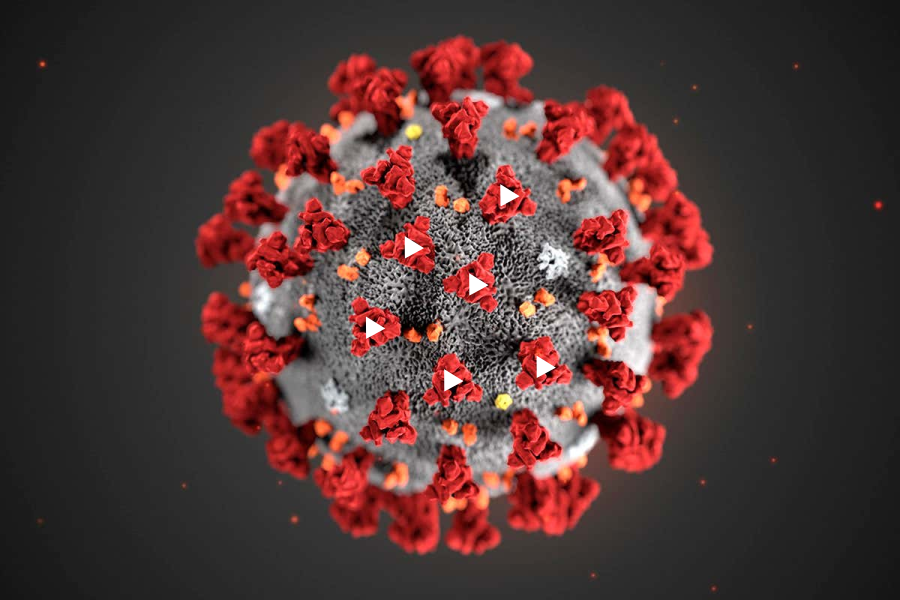

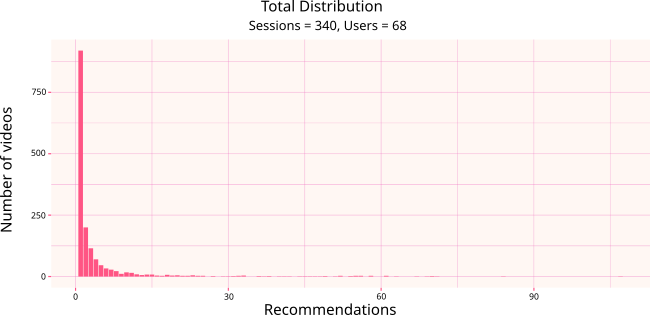

Distribution of Recommendations

* * *

The vast majority of videos are recommended very few times (1-3 times)

-> Summing up, 57% of the recommended videos were recommended only once (to a single participant).

-> Only around 17% of the videos were recommended more than 5 times (out of 68 participants).

For example, the first bar represents the videos recommended once: there are more than 800 of them.
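
A distribution like this one can be computed with a few lines of Python. The sketch below is a minimal example on made-up data: the `(participant, video)` pairs and the function name are assumptions, and a video counts once per participant, matching the reading "recommended once = recommended to a single participant".

```python
from collections import Counter

# Hypothetical input: one (participant, video_id) pair per observed recommendation.
observations = [
    ("p1", "vidA"), ("p2", "vidA"), ("p3", "vidA"),
    ("p1", "vidB"),
    ("p2", "vidC"),
    ("p3", "vidD"), ("p1", "vidD"),
]


def recommendation_distribution(pairs):
    """Map 'number of participants who got a video' to 'number of such videos'."""
    # set() removes repeated pairs, so each video counts once per participant.
    per_video = Counter(video for _, video in set(pairs))
    return Counter(per_video.values())


dist = recommendation_distribution(observations)
share_once = dist[1] / sum(dist.values())
print(dict(sorted(dist.items())))  # {1: 2, 2: 1, 3: 1}
print(f"{share_once:.0%} of videos were recommended only once")  # 50% ...
```

Each key of `dist` corresponds to one bar of the chart: key 1 is the first bar, the videos recommended to a single participant.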

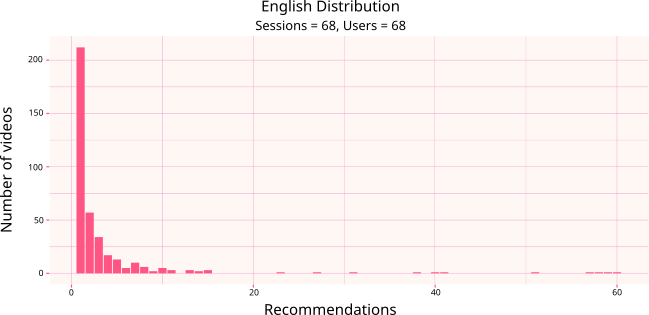

Distribution of Recommendations

* * *

Analyzing the recommendations of each single video, the distribution doesn't change.

-> Here you can find the distribution graphs for each language.

-> The only video suggested to all the participants is a live stream by BBC in Arabic. It appears as a recommendation when watching the Arabic video.

For example, the first bar represents the videos recommended once: there are more than 200 of them.
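
Finding the recommendations that reached every participant, separately for each watched video, can be sketched as follows. The triples and identifiers such as `bbc_arabic_live` are invented for the example and do not come from the collected dataset.

```python
from collections import defaultdict

# Hypothetical input: (participant, watched_video, recommended_video) triples.
observations = [
    ("p1", "arabic", "bbc_arabic_live"), ("p2", "arabic", "bbc_arabic_live"),
    ("p3", "arabic", "bbc_arabic_live"), ("p1", "arabic", "vidX"),
    ("p1", "english", "vidY"), ("p2", "english", "vidY"), ("p3", "english", "vidZ"),
]


def suggested_to_everyone(triples):
    """Return {watched_video: set of recommendations seen by every participant}."""
    participants = {p for p, _, _ in triples}
    # watched video -> recommended video -> participants who received it
    seen_by = defaultdict(lambda: defaultdict(set))
    for participant, watched, recommended in triples:
        seen_by[watched][recommended].add(participant)
    return {
        watched: {rec for rec, who in recs.items() if who == participants}
        for watched, recs in seen_by.items()
    }


print(suggested_to_everyone(observations))
# {'arabic': {'bbc_arabic_live'}, 'english': set()}
```

In this toy data only the Arabic video has a recommendation shared by all three participants; every other suggestion reaches a subset of them.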

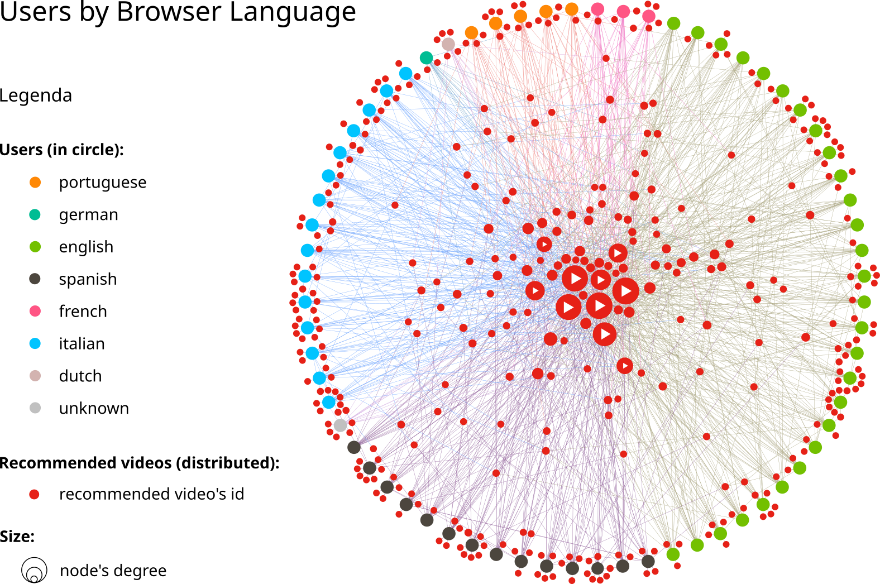

Users, arranged in a circle, watch the same videos and get highly differentiated suggestions (red nodes)

Recommendations Network

* * *

Here we can see the network of recommended videos generated by the YouTube algorithm, comparing the participants.

-> Graph of the videos suggested to the participants while watching the video “How do I know if I have coronavirus? - BBC News”

-> The nodes outside the circle of users are videos suggested to just one participant: they are the most personalized.
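
The graph described here is bipartite: participants on one side, recommended videos on the other. A minimal sketch, assuming made-up edges and hypothetical function names, shows how the outer nodes (videos linked to exactly one participant) can be picked out:

```python
from collections import defaultdict

# Hypothetical edges: (participant, recommended_video), all collected
# while the participants were watching the same video.
edges = [("p1", "vidA"), ("p2", "vidA"), ("p1", "vidB"), ("p2", "vidC"), ("p3", "vidA")]


def build_graph(edges):
    """Adjacency map: video -> set of participants who received it."""
    graph = defaultdict(set)
    for participant, video in edges:
        graph[video].add(participant)
    return graph


def most_personalized(graph):
    """Videos linked to exactly one participant (the outer nodes of the graph)."""
    return sorted(video for video, who in graph.items() if len(who) == 1)


graph = build_graph(edges)
print(most_personalized(graph))  # ['vidB', 'vidC']
```

Videos with many incoming edges sit in the middle of the drawing, while the degree-one videos returned here end up on the outside.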

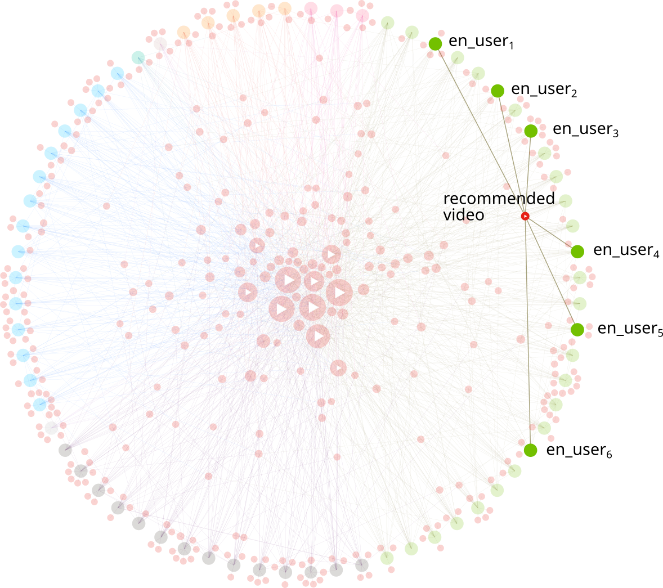

Same graph as the previous slide, with some nodes highlighted.

Recommendations Network

* * *

An example of a video suggested only to participants with an English-language browser

-> A basic example of how our personal information (such as the language we speak) is used to personalize our experience

-> Here we have a piece of the filter bubble: the algorithm divides us from other users using our personal information
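
The same idea can be checked on data: which videos were suggested to participants with one browser language and to nobody else? The sketch below uses invented participants, languages and video ids, and the function name is hypothetical.

```python
# Hypothetical data: browser language per participant and the videos each one received.
browser_language = {"p1": "en", "p2": "en", "p3": "es", "p4": "pt"}
recommended = {
    "p1": {"vidA", "vidEN"},
    "p2": {"vidA", "vidEN"},
    "p3": {"vidA", "vidES"},
    "p4": {"vidA"},
}


def exclusive_to_language(language):
    """Videos recommended to at least one `language` user and to nobody else."""
    inside, outside = set(), set()
    for participant, videos in recommended.items():
        if browser_language[participant] == language:
            inside.update(videos)
        else:
            outside.update(videos)
    return inside - outside


print(exclusive_to_language("en"))  # {'vidEN'}
```

A non-empty result means that the browser language alone was enough to separate one group of participants from the rest.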

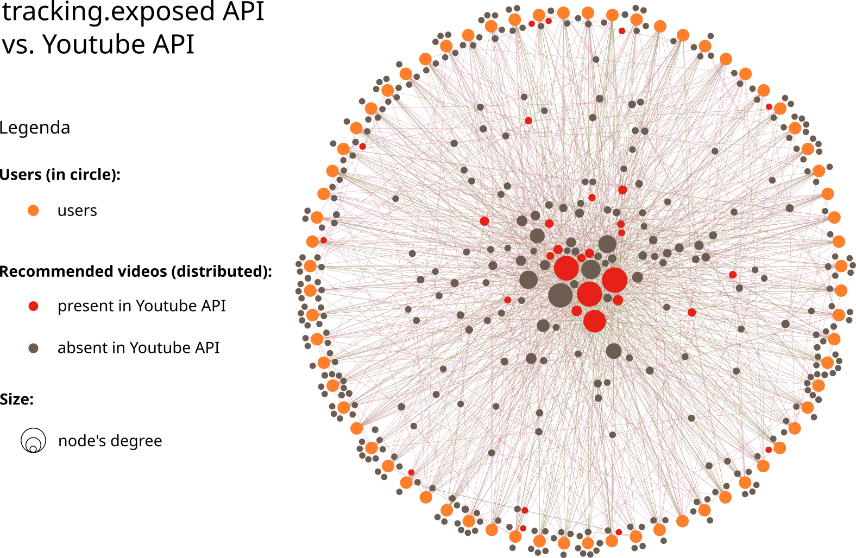

The same graph as before, but the videos that YouTube says should be recommended are shown in red.

Official API? No thanks!

* * *

A comparison between official YouTube data (OfficialAPI) and our independently collected dataset

-> YouTube data are not a good starting point to analyze... the YouTube algorithm!

-> That's why passive scraping tools like youtube.tracking.exposed are a good way to analyze the platform independently!
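
A comparison of this kind boils down to measuring how much two lists of videos overlap. The sketch below is an assumption-laden example: the video ids are invented, and the Jaccard index is a measure chosen for this illustration, not necessarily the one used in the original analysis.

```python
def compare_sources(api_related, observed):
    """Compare two collections of video ids and return overlap statistics."""
    api_related, observed = set(api_related), set(observed)
    shared = api_related & observed
    union = api_related | observed
    return {
        "shared": sorted(shared),
        "only_api": sorted(api_related - observed),
        "only_observed": sorted(observed - api_related),
        # Jaccard index: 1.0 means identical sets, 0.0 means no video in common.
        "jaccard": len(shared) / len(union) if union else 0.0,
    }


# Hypothetical ids, for illustration only.
api_list = ["vidA", "vidB", "vidC"]
seen_by_participants = ["vidC", "vidD", "vidE", "vidF"]

report = compare_sources(api_list, seen_by_participants)
print(report["shared"], round(report["jaccard"], 2))  # ['vidC'] 0.17
```

A low index means the official list says little about what participants were actually shown.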

Take-Home Message

* * *

Filter bubbles do exist, and we can measure them

Never trust the official API for independent research